|

||

|

DeepSeek model analysis and its applications in AI-assistant protein engineering

Synthetic Biology Journal

2025, 6 (3):

636-650.

DOI: 10.12211/2096-8280.2025-041

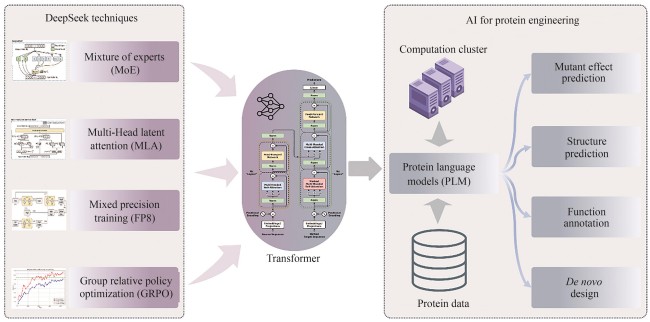

In early 2025, Hangzhou DeepSeek AI Foundation Technology Research Co., Ltd. released and open-sourced its independently developed DeepSeek-R1 conversational large language model. This model exhibits extremely low inference costs and outstanding chain-of-thought reasoning capabilities, performing comparably to, and in some tasks surpassing, proprietary models like GPT-4o and o1. This achievement has garnered significant international attention. Furthermore, DeepSeek’s excellent performance in Chinese conversations and its free-for-commercial-use strategy have ignited a wave of deployment and application within China, thereby promoting the widespread adoption and development of AI technology. This work systematically analyzes the architectural design, training methodology, and inference mechanisms of the DeepSeek model, exploring the transfer potential and application prospects of its core technologies in AI-assistant protein research. The DeepSeek model integrates several cutting-edge, independently innovated technologies, including a multi-head latent attention mechanism, mixture-of-experts (MoE) with load balancing, and low-precision training. These innovations have substantially reduced the training and inference costs for Transformer models. Although DeepSeek was originally designed for human language understanding and generation, its optimization techniques hold significant reference value for pre-trained language models with proteins, which are also based on the Transformer architecture. By leveraging the key technologies employed in DeepSeek, protein language models are expected to achieve substantial reductions in training and inference costs.

Fig. 2

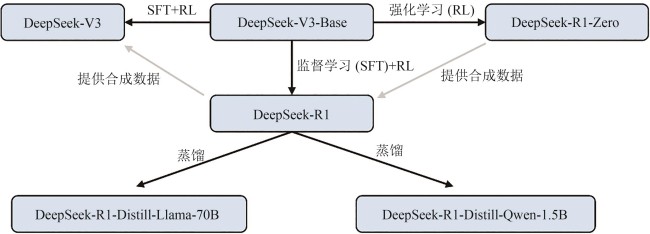

Relationship between DeepSeek-V3, V3-Base, R1 and R1-Zero series models

Extracts from the Article

以DeepSeek-V3模型和DeepSeek-R1模型的发布为标志,DeepSeek实现了将前期多项核心技术的整合,不仅显著提升了模型的生成质量,而且加速了模型的推理,相比于同样表现的其他模型具备更低的部署成本,引发了DeepSeek模型私有部署的热潮。DeepSeek-V3模型与DeepSeek-R1模型的主要区别在于R1模型具备深度思考(reasoning)的能力,回答准确度的表现更优。DeepSeek-V3和DeepSeek-R1拥有共同的训练起点——DeepSeek-V3-Base,DeepSeek-V3系列之间的关系如图2所示。

Other Images/Table from this Article

|